Redis vs. Memcached vs. Hazelcast: Which Cache Should You Choose?

Master the internal architecture of Redis (single-threaded), Memcached (multithreaded), and Hazelcast (IMDG). Learn when to use Cache-Aside, how to handle eviction policies, and how to scale.

The performance of modern software is often limited by a single physical constraint: the speed of data retrieval.

As applications scale to support thousands or millions of users, the primary database often becomes the bottleneck.

Most traditional databases persist information on hard disks or solid-state drives.

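While these storage media offer durability and vast capacity, they are far slower than the Central Processing Unit (CPU). Hard disks are limited by moving mechanical parts, and solid-state drives, though much faster, still cannot deliver data at the pace the processor consumes it.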

When a request must wait for data to arrive from a disk, the processor cannot make progress on it. This waiting time translates directly into latency.

In a high-traffic environment, thousands of read requests pile up, causing the database to slow down and the user experience to degrade. A random read from a spinning hard disk takes several milliseconds, and even a solid-state drive needs tens to hundreds of microseconds, whereas a main-memory access completes in roughly a hundred nanoseconds.

To bridge this performance gap, system architects introduce a caching layer: a temporary storage system that holds its data in Random Access Memory (RAM). By keeping frequently accessed data in RAM, the system can serve most requests in a fraction of the time, bypassing slow database reads.

While the concept is straightforward, selecting the right technology to implement this layer is a complex architectural decision.

The three dominant technologies in this space are Memcached, Redis, and Hazelcast. Each tool is built upon a different philosophy and offers distinct advantages.

This guide explores the internal mechanics of each to provide a clear understanding of how they function in a distributed system.

Key Takeaways

Performance Bottlenecks: System latency is often dominated by data retrieval speed; caching bridges the gap between slow disk storage (microseconds to milliseconds) and fast RAM (nanoseconds).

Caching Mechanics: Applications typically access in-memory stores through the Cache-Aside pattern, and the stores rely on an eviction policy such as Least Recently Used (LRU) to manage their limited memory.

Memcached Architecture: A multithreaded key-value store that uses Slab Allocation to limit memory fragmentation. It excels at vertical scaling for simple, volatile data.

Redis Architecture: A single-threaded data structure server that supports complex types (Lists, Sets) and disk persistence (RDB/AOF). It is best for versatile, general-purpose application logic.

Hazelcast Architecture: A Java-based In-Memory Data Grid (IMDG). It features an Embedded Mode for local memory access and “Compute-to-Data” capabilities for distributed processing.

Selection Guide: Choose Memcached for raw multithreaded throughput, Redis for complex data structures and persistence, or Hazelcast for distributed Java clustering.

How Caching Works

Before analyzing the specific tools, it is essential to understand the fundamental mechanics of an in-memory key-value store. Unlike relational databases that use tables and rows, a cache typically uses a simple dictionary structure. Every piece of data is assigned a unique identifier, known as the key. The data itself is the value.

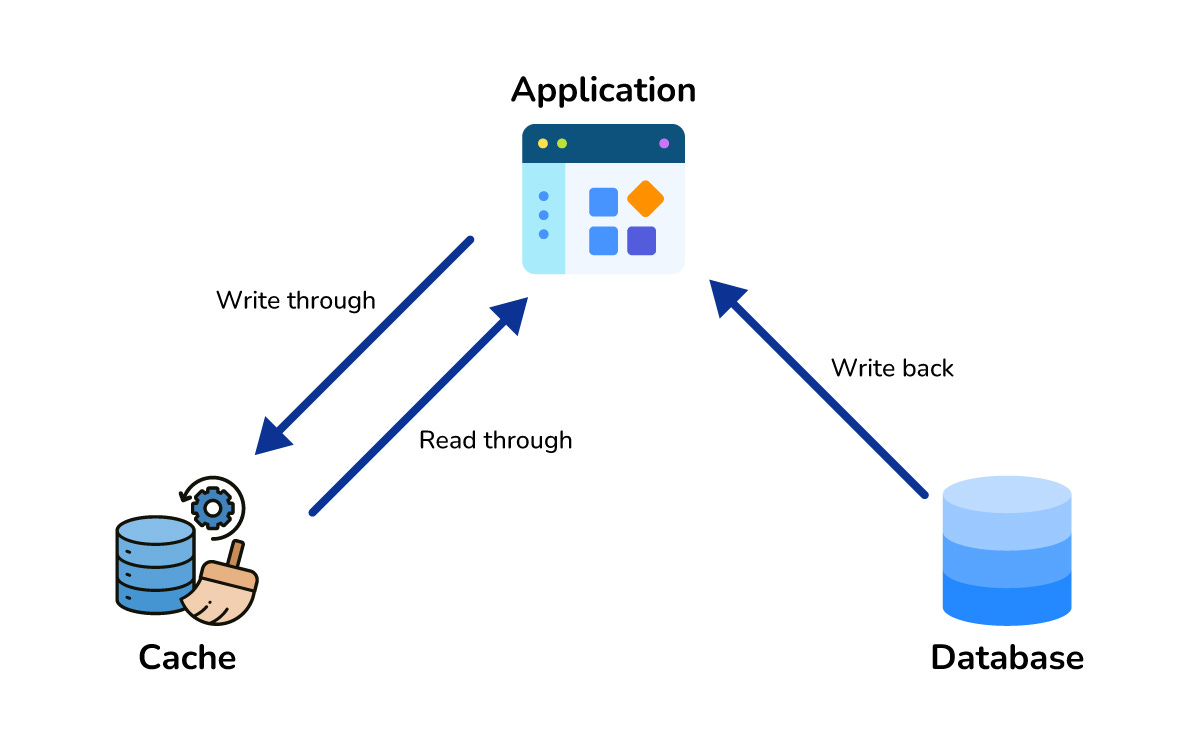

The standard workflow for a caching layer follows a specific pattern known as Cache-Aside or Lazy Loading:

The Request: The application requires a specific piece of data.

Cache Check: The application first queries the cache using the key.

Cache Hit: If the data exists in the cache, it is returned immediately. This is the optimal outcome.

Cache Miss: If the data is absent, the application queries the primary database.

Refill: The application retrieves the data from the database, writes a copy to the cache so that later requests for the same key become hits, and returns the data to the user.
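The workflow above can be sketched in Python as follows. This is a minimal, single-process illustration rather than the behavior of any particular cache client: a plain dictionary stands in for the cache, `fake_db` and `slow_query` are hypothetical stand-ins for the primary database, and the per-entry time-to-live (`ttl_seconds`) is an added assumption that many deployments use to bound how stale cached data can become.

```python
import time
from typing import Callable, Dict, Optional, Tuple


class CacheAside:
    """Cache-Aside (lazy loading) in front of a slower data source."""

    def __init__(self, load_from_db: Callable[[str], Optional[str]], ttl_seconds: float = 60.0):
        self.load_from_db = load_from_db
        self.ttl_seconds = ttl_seconds          # assumed expiry; not part of the pattern itself
        self.cache: Dict[str, Tuple[str, float]] = {}  # key -> (value, expiry time)
        self.hits = 0
        self.misses = 0

    def get(self, key: str) -> Optional[str]:
        entry = self.cache.get(key)             # Cache check
        if entry is not None and entry[1] > time.monotonic():
            self.hits += 1                      # Cache hit: serve straight from memory
            return entry[0]
        self.misses += 1                        # Cache miss (or expired entry)
        value = self.load_from_db(key)          # Query the primary database
        if value is not None:                   # Refill so the next read is a hit
            self.cache[key] = (value, time.monotonic() + self.ttl_seconds)
        return value


fake_db = {"user:42": "Ada"}                    # hypothetical database contents


def slow_query(key: str) -> Optional[str]:
    time.sleep(0.01)                            # simulate disk latency
    return fake_db.get(key)


cache = CacheAside(slow_query)
cache.get("user:42")                            # miss: loaded from the "database"
cache.get("user:42")                            # hit: served from the cache
print(cache.hits, cache.misses)                 # 1 1
```

Keys that are absent from the database are not cached here, so repeated lookups for them keep reaching the database; whether to cache "not found" results is a design choice left open in this sketch.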

Because RAM costs significantly more per gigabyte than disk storage, and is therefore available in far smaller amounts, a cache cannot store every piece of data indefinitely. It must use an Eviction Policy to decide what to discard when memory runs out.

The most common policy is Least Recently Used (LRU). When the cache reaches its memory limit, the system identifies the item that has not been accessed for the longest period and deletes it to make space for incoming data.
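A minimal LRU cache can be built on Python's `collections.OrderedDict`, which remembers key order and can move a key to the end in constant time. The class name `LRUCache` and its `capacity` parameter are illustrative; this sketches the policy itself, not the eviction code of Memcached, Redis, or Hazelcast.

```python
from collections import OrderedDict
from typing import Any, Hashable, Optional


class LRUCache:
    """Fixed-capacity cache that evicts the least recently used entry."""

    def __init__(self, capacity: int):
        if capacity <= 0:
            raise ValueError("capacity must be positive")
        self.capacity = capacity
        self._data: "OrderedDict[Hashable, Any]" = OrderedDict()  # oldest first

    def get(self, key: Hashable) -> Optional[Any]:
        if key not in self._data:
            return None
        self._data.move_to_end(key)             # mark as most recently used
        return self._data[key]

    def put(self, key: Hashable, value: Any) -> None:
        if key in self._data:
            self._data.move_to_end(key)
        self._data[key] = value
        if len(self._data) > self.capacity:
            self._data.popitem(last=False)      # evict the least recently used entry


cache = LRUCache(capacity=2)
cache.put("a", 1)
cache.put("b", 2)
cache.get("a")                                  # "a" becomes most recently used
cache.put("c", 3)                               # over capacity: "b" is evicted
print(list(cache._data))                        # ['a', 'c']
```

Production systems often approximate LRU instead of tracking exact order for every key; Redis, for example, samples a small number of keys and evicts the least recently used among them.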

With this foundation established, we can examine how Memcached, Redis, and Hazelcast implement these concepts differently.

Memcached: The Multithreaded Specialist

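Memcached is one of the earliest widely adopted distributed caching systems. Its design philosophy focuses on extreme simplicity and raw throughput. It provides a volatile, in-memory key-value store that spreads data across multiple servers; the servers do not communicate with one another, so the client library decides which server holds each key.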
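Because Memcached servers are unaware of each other, the client has to map every key to exactly one server. Many Memcached client libraries support consistent hashing for this (often the Ketama scheme), so adding or removing a server only remaps the keys that server owned. The sketch below is a simplified hash ring under assumed parameters: MD5 for ring positions, 100 virtual nodes per server, and hypothetical server addresses. It is not the exact algorithm of any specific client.

```python
import bisect
import hashlib
from typing import Dict, List


class HashRing:
    """Consistent hashing: each server owns many points on a ring of integers."""

    def __init__(self, servers: List[str], replicas: int = 100):
        if not servers:
            raise ValueError("at least one server is required")
        self._points: List[int] = []
        self._owner: Dict[int, str] = {}
        for server in servers:
            for i in range(replicas):           # virtual nodes smooth out the distribution
                point = self._hash(f"{server}#{i}")
                self._points.append(point)
                self._owner[point] = server
        self._points.sort()

    @staticmethod
    def _hash(value: str) -> int:
        # First 8 bytes of an MD5 digest, read as an unsigned integer position
        return int.from_bytes(hashlib.md5(value.encode("utf-8")).digest()[:8], "big")

    def server_for(self, key: str) -> str:
        # Walk clockwise to the first server point at or after the key's position
        idx = bisect.bisect_left(self._points, self._hash(key)) % len(self._points)
        return self._owner[self._points[idx]]


servers = ["cache-a:11211", "cache-b:11211", "cache-c:11211"]  # hypothetical hosts
ring = HashRing(servers)
print(ring.server_for("user:42"))               # always the same server for this key

# Removing cache-c only remaps the keys that cache-c owned.
smaller = HashRing(servers[:2])
keys = [f"key:{n}" for n in range(1000)]
moved = [k for k in keys
         if ring.server_for(k) != "cache-c:11211" and ring.server_for(k) != smaller.server_for(k)]
print(len(moved))                               # 0
```

A plain `hash(key) % number_of_servers` scheme would also work, but changing the server count would then remap most keys at once, turning a resize into a wave of cache misses.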

Multithreaded Architecture

The defining technical feature of Memcached is its multithreaded architecture. A thread is an independent sequence of instructions that the operating system can schedule on a CPU core. Modern servers are equipped with multi-core processors that can run many threads simultaneously.

Memcached is designed to use these multiple cores. It runs a configurable pool of worker threads (set with its -t startup option), so on a server with 64 CPU cores, many threads can handle incoming requests in parallel. This allows a single Memcached server to sustain a very large number of concurrent connections, which makes Memcached well suited to vertical scaling: increasing performance by upgrading the hardware of a single server.
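Memcached's worker threads share one hash table and coordinate through fine-grained locks rather than a single global lock, so threads working on different keys rarely wait for each other. The Python sketch below is loosely modeled on that idea using lock striping; `StripedStore`, `num_stripes`, and `handle_request` are hypothetical names. CPython's global interpreter lock prevents these threads from executing Python code truly in parallel, so the example shows the locking structure, not Memcached's actual speedup.

```python
import threading
from concurrent.futures import ThreadPoolExecutor
from typing import Dict, List, Optional


class StripedStore:
    """A shared key-value table guarded by several locks instead of one global lock."""

    def __init__(self, num_stripes: int = 16):
        self._locks = [threading.Lock() for _ in range(num_stripes)]
        self._buckets: List[Dict[str, bytes]] = [{} for _ in range(num_stripes)]

    def _stripe(self, key: str) -> int:
        return hash(key) % len(self._locks)     # the same key always maps to the same stripe

    def set(self, key: str, value: bytes) -> None:
        i = self._stripe(key)
        with self._locks[i]:                    # only requests for this stripe wait here
            self._buckets[i][key] = value

    def get(self, key: str) -> Optional[bytes]:
        i = self._stripe(key)
        with self._locks[i]:
            return self._buckets[i].get(key)


store = StripedStore()


def handle_request(n: int) -> Optional[bytes]:
    key = f"item:{n}"
    store.set(key, str(n).encode())
    return store.get(key)


# A pool of worker threads serves requests concurrently, loosely analogous
# to the worker threads Memcached starts according to its -t option.
with ThreadPoolExecutor(max_workers=4) as pool:
    results = list(pool.map(handle_request, range(1000)))

print(results[:3])                              # [b'0', b'1', b'2']
```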

Memory Management via Slab Allocation

One of the challenges in memory management is fragmentation. This occurs when memory is allocated and deallocated repeatedly, leaving small, unusable gaps of memory scattered throughout the RAM.

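Memcached reduces this problem with a technique called Slab Allocation. Instead of asking the operating system for memory every time a new item is stored, Memcached allocates memory in large pages (1 MB by default) and divides each page into fixed-size chunks. Pages are grouped into slab classes, each with its own chunk size, and each class's chunks are larger than the previous class's by a configurable growth factor. When a new item arrives, Memcached stores it in a chunk from the class with the smallest chunk size that fits. The trade-off is some wasted space inside each chunk (internal fragmentation), but memory never becomes riddled with unusable gaps, so performance remains stable over long periods of operation.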
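The slab-class layout can be illustrated with a short calculation. The defaults below (96-byte smallest chunk, growth factor 1.25, 1 MB pages, 8-byte alignment) are assumptions chosen to resemble a typical Memcached configuration; the real server's class sizes depend on its version and startup options, and the function names are hypothetical.

```python
from typing import List, Optional


def slab_chunk_sizes(min_chunk: int = 96, growth_factor: float = 1.25,
                     page_size: int = 1024 * 1024, align: int = 8) -> List[int]:
    """Return the chunk size of each slab class, smallest first."""
    sizes: List[int] = []
    size = min_chunk
    while size <= page_size // 2:
        if size % align:
            size += align - size % align        # round up to the alignment boundary
        sizes.append(size)
        next_size = int(size * growth_factor)
        size = next_size if next_size > size else size + align  # always make progress
    sizes.append(page_size)                     # the largest class holds one item per page
    return sizes


def pick_slab_class(item_size: int, sizes: List[int]) -> Optional[int]:
    """Index of the smallest chunk size that can hold the item, or None if it is too large."""
    for i, chunk in enumerate(sizes):
        if item_size <= chunk:
            return i
    return None


sizes = slab_chunk_sizes()
print(sizes[:5])                                # [96, 120, 152, 192, 240]

chunk = sizes[pick_slab_class(130, sizes)]
print(chunk, chunk - 130)                       # 152 22  (22 bytes of internal fragmentation)
```

A 130-byte item lands in the 152-byte class, so 22 bytes of that chunk go unused. A smaller growth factor creates more, finer-grained classes and less waste per item, at the cost of spreading memory across more classes.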

Data Opacity

Memcached treats data as opaque. It does not understand or care about the structure of the data it stores. To Memcached, every value is simply a blob of bytes. If a developer wants to store a user profile, the application must serialize that object into a byte string before sending it to Memcached. When the data is retrieved, the application must deserialize it back into a usable object. This simplicity keeps the system lightweight but limits the operations you can perform on the data.
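The serialization round trip described above looks like this in Python. A dictionary holding `bytes` values stands in for the Memcached server, JSON is just one possible encoding, and the key `user:42` and the profile fields are hypothetical.

```python
import json
from typing import Dict

# Stand-in for a Memcached server: it stores raw bytes and nothing else.
opaque_store: Dict[str, bytes] = {}

user_profile = {"id": 42, "name": "Ada", "roles": ["admin", "editor"]}

# Serialize: the application turns the object into a byte string before storing it.
opaque_store["user:42"] = json.dumps(user_profile).encode("utf-8")

# Deserialize: the application turns the bytes back into a usable object.
restored = json.loads(opaque_store["user:42"].decode("utf-8"))

# Changing one field means fetching, decoding, editing, re-encoding, and
# writing back the whole value; the store cannot update part of it.
restored["roles"].append("viewer")
opaque_store["user:42"] = json.dumps(restored).encode("utf-8")

print(restored["roles"])                        # ['admin', 'editor', 'viewer']
```

With a real Memcached server, this read-modify-write sequence can race with other clients updating the same key; Memcached's check-and-set (cas) command exists to detect that case.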

Redis: The Data Structure Server

Redis stands for Remote Dictionary Server. While it fulfills the same role as Memcached, its internal architecture and capabilities are vastly different. It is often described not just as a cache, but as a data structure server.